Longread

Artificial Intelligence in the Food Domain

Smart Technology, the technology that connects the real world with the digital world, is expected to be a major factor in making the agrifood domain future-proof. Food security, climate change mitigation, and the sustainable use of raw materials are examples of the big challenges facing all food systems. Remaining economically sustainable is, and will continue to be, a challenge for all actors in the supply chain!

In recent years, the world has changed significantly. The Covid-19 pandemic struck, serious conflicts have arisen, and logistics have been disrupted. Energy and raw materials have become scarce, and their prices have risen dramatically.

At the same time, Smart Technology has continued to develop. You can find news items about ChatGPT and Stable Diffusion, two artificially intelligent solutions that create texts and illustrations that could have been made by humans [1]. You can read about autonomous vehicles, robots, and applications in which intelligent agents, built on advances in artificial intelligence, perform tasks previously done by humans, and do so with uncanny accuracy.

In our view, the key developments in Smart Tech for the agrifood domain are (i) the availability of increasingly advanced sensors and the resulting big data, (ii) the rise of Artificial Intelligence (AI), and (iii) the development of digital twins and decision support tools. In this longread, we focus on developments in artificial intelligence. We give our own take on these developments, but also ask the much-discussed ChatGPT chatbot for its opinion on recent developments in the form of an interview.

A short history of AI developments

The field of AI is expanding rapidly, but it is not a new domain. The term "Artificial Intelligence" is generally credited to John McCarthy, who coined it for the 1956 Dartmouth Conference, widely considered the birthplace of AI as an independent field of study. However, the first ripples of AI were already evident in the mid-to-late 1940s. Through the pioneering work of the English mathematician Alan Mathison Turing in the late 1940s and early 1950s, the notion of AI began to emerge as computationally intelligent machines: machines that could learn from experience as well as solve new problems. Turing is well known for, amongst other things, his role in cracking the Enigma code during World War II, which made it possible to read intercepted coded messages and helped the Allies defeat the Nazis; proposing the concept of the Universal Turing Machine, a theoretical device that can manipulate arbitrary symbols according to a set of rules and a foundation of modern computing; and putting forward the “Turing test”, a measure of a machine's ability to demonstrate human-level intelligence.
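To make the idea of a device that manipulates symbols according to a set of rules a little more concrete, here is a minimal Python sketch of an ordinary (not universal) Turing machine. The rule table `INVERT` and the function `run_turing_machine` are hypothetical illustrations: this machine simply flips every bit on its tape and halts at the first blank. A universal machine would additionally read another machine's rule table from its own tape and simulate it.

```python
from collections import defaultdict

# A rule maps (current state, symbol under the head) to
# (symbol to write, head movement: -1 left / 0 stay / +1 right, next state).
Rules = dict[tuple[str, str], tuple[str, int, str]]

# Hypothetical example machine: invert every bit, halt on the first blank ("_").
INVERT: Rules = {
    ("scan", "0"): ("1", +1, "scan"),
    ("scan", "1"): ("0", +1, "scan"),
    ("scan", "_"): ("_", 0, "halt"),
}


def run_turing_machine(rules: Rules, tape_input: str, start: str = "scan",
                       blank: str = "_", max_steps: int = 10_000) -> str:
    """Apply the rule table step by step until the machine enters the 'halt' state."""
    # Unvisited tape cells read as blank, so the tape is unbounded in both directions.
    tape = defaultdict(lambda: blank, enumerate(tape_input))
    head, state, steps = 0, start, 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        write, move, state = rules[(state, tape[head])]
        tape[head] = write
        head += move
        steps += 1
    # Read the visited part of the tape from left to right, dropping surrounding blanks.
    cells = (tape[i] for i in range(min(tape), max(tape) + 1))
    return "".join(cells).strip(blank)
```

For example, `run_turing_machine(INVERT, "0110")` returns `"1001"`. The point is that the machine has no understanding of bits at all: it only looks up its current state and symbol in a table, which is exactly what makes the model so general.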

The Turing test is a test to see whether a computer can show a level of intelligence that matches that of a human. If you communicate with someone through chat and cannot tell whether you are communicating with a human or a chatbot, then the AI chatbot has passed the Turing test [2].

Since the 1950s, AI has developed in the scientific world: sometimes faster, sometimes slower, but steadily progressing. The next paragraphs summarise the AI timeline as described on Wikipedia [3]. The first popular applications were checkers- and chess-playing computers, which at first were not very impressive compared with human players. New fields of AI research opened up: the first text-processing and translation programs were developed, the first robots were built, the foundations of neural networks were laid, the first decision support systems were developed, and by 1966 the first popular chatbot, ELIZA, had appeared. But a series of critical articles on the limitations of artificial intelligence in the late 1960s and 1970s stopped many of these developments, and the first major ‘AI winter’ began [4]. In the 1970s, many building blocks of current AI were developed: computer-understandable logic, computer vision and perception, new programming languages, and better, smaller and faster computers. Even the first autonomous vehicle was developed, driving around indoors in a Stanford laboratory in the United States. In 1987, AI took its first steps from science to industry when a commercial management advisory system was launched: an expert system that used around 3000 rules to advise on strategic and financial decisions. However, expert systems faced significant resistance and were criticised for their lack of generalizability. This was one of the triggers for the second major ‘AI winter’.

In the 1990s, things started moving quickly, with results that reached the general public. Computers played backgammon, checkers, Othello and chess at world-class level. Deep Blue, IBM’s chess machine, defeated world champion Garry Kasparov for the first time. RoboCup soccer matches started. Autonomous vehicles made test drives on real highways in real traffic. Web crawlers and search engines became available to consumers to navigate the Internet. In 2002, the Roomba vacuum cleaner was brought to the consumer market. Since 2005, you can encounter serving robots in restaurants. Since 2011, you can talk to Siri, Alexa, and their AI colleagues to have your questions answered. More difficult games, like Go and poker, are nowadays the playing field of humans and AI agents alike.

Classical machine learning and modern neural nets

We collect a lot of data in almost every process these days. Huge amounts flow into our hard drives and the cloud every moment. There is so much data that it has become incomprehensible to humans. So, what do we do with it?

Machine Learning is one of the ways to extract meaningful insights from this deluge of data. Machine Learning (ML) is a field of AI in which computer programs learn from examples. Such programs can make sense of huge numbers of examples (remember, we have too much data for humans to understand!). Machine Learning algorithms consume many examples, process them, and learn to perform a task, in many cases at human-level quality. To keep it simple, we will not dive into the vast field of machine learning algorithms, their differences, pros and cons, but rather talk about the challenges this technology can solve.
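As a minimal illustration of "learning from examples", the Python sketch below uses a k-nearest-neighbours classifier, one of the simplest classical ML techniques (our choice for illustration, not a method described in this article). The features, grades and values in `TRAINING` are made up: each example pairs two measurements, a size score and a redness score scaled to the range 0 to 1, with a quality label. A new item receives the label held by the majority of its three closest examples.

```python
import math
from collections import Counter

# One labelled example: ((size_score, redness_score), label). All values are hypothetical.
Example = tuple[tuple[float, float], str]

TRAINING: list[Example] = [
    ((0.90, 0.80), "class I"),
    ((0.85, 0.75), "class I"),
    ((0.80, 0.85), "class I"),
    ((0.30, 0.30), "class II"),
    ((0.35, 0.25), "class II"),
    ((0.25, 0.35), "class II"),
]


def knn_predict(examples: list[Example], query: tuple[float, float], k: int = 3) -> str:
    """Predict a label for `query` by majority vote among the k closest examples."""
    # Rank all stored examples by Euclidean distance to the query point.
    nearest = sorted(examples, key=lambda ex: math.dist(ex[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]
```

Here `knn_predict(TRAINING, (0.88, 0.78))` returns `"class I"`. Scaling both features to the same range matters: otherwise the feature with the largest numbers would dominate the distance. Adding more labelled examples is, quite literally, how this model "learns".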

AI is already used in a wide range of industries and business processes: ML algorithms predict the best moment to maintain a valve in a gas pipeline, serve as chatbots that direct your questions and complaints to the right department (have you tried ChatGPT?), help you find a place based on a picture of a location, transform a photo of a document into editable text, generate pictures from a description, and so much more! Agriculture is no exception.

We work on a multitude of projects throughout the entire industry to bring technological advances to agriculture. To list a few: automatic sorting of fresh produce, detecting and counting fish underwater, harvesting fruits and vegetables with robots, detecting diseases in plants, and determining the quality of fruits and vegetables, all without human intervention.

Computers supporting humans in a more or less supervised way (meaning that humans first need to teach the computer a task before it can perform that task in a similar context) have existed for a long time. Nowadays, terms like deep learning and neural networks are everywhere when you read about AI developments. However, before neural networks became widespread, conventional machine learning techniques were already being implemented successfully in businesses. These methods gave companies insights into various aspects of their operations, helped automate processes, and more. However, they typically require a lot of data preparation, rely on multiple assumptions, and may not be suitable for a wide range of contexts. Despite these limitations, conventional machine learning methods offer benefits such as stable performance, a high degree of control, and explainability. They also typically require less data than neural networks.
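The explainability of conventional methods can be illustrated with a decision stump, a one-rule classifier that learns a single threshold on a single feature. In this hypothetical Python sketch, `fit_stump` and the firmness values are assumptions for illustration: it tries every candidate threshold and keeps the one that misclassifies the fewest training examples, so the learned model is a rule a person can read directly.

```python
# Hypothetical toy data: fruit firmness (arbitrary units) and whether the fruit was accepted (1) or not (0).
FIRMNESS = [2.0, 3.5, 4.0, 6.0, 7.5, 8.0]
ACCEPTED = [0, 0, 0, 1, 1, 1]


def fit_stump(values: list[float], labels: list[int]) -> tuple[float, int]:
    """Find the threshold that misclassifies the fewest examples.

    The stump predicts 1 when value > threshold. Returns (threshold, training_errors).
    """
    candidates = sorted(set(values))
    # Also consider a threshold below every value (i.e. "always predict 1").
    thresholds = [candidates[0] - 1.0] + candidates
    best_threshold, best_errors = thresholds[0], len(values) + 1
    for t in thresholds:
        errors = sum(1 for v, y in zip(values, labels) if (v > t) != bool(y))
        if errors < best_errors:
            best_threshold, best_errors = t, errors
    return best_threshold, best_errors
```

With the toy data, `fit_stump(FIRMNESS, ACCEPTED)` returns `(4.0, 0)`, i.e. the rule "accept if firmness > 4.0" with no training errors. Unlike the millions of weights in a neural network, such a rule can be checked and adjusted by a domain expert.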

Some examples of conventional machine learning in the agrifood domain follow below.

Example 1: Automated egg inspection

In supermarkets, eggs are offered in boxes of 6 or 10. Eggs in one box have approximately the same colour and are relatively clean. This is not because all hens lay perfect eggs, but because quality inspection and sorting steps take place between egg production and the supermarket. In industrial egg inspection lines, all eggs are selected on shape and inspected for the presence of dirt, such as excrement, egg yolk or blood. Eggs that are too dirty or odd-shaped are rejected for the consumer market; the remaining eggs are sorted by colour and size. Until recently, this job was performed by human operators. Today, egg sorting lines have a throughput of many tens of thousands of eggs per line per hour. The number of eggs that need to be inspected per second is too high even for skilled egg inspectors. Therefore, a computer vision system for automated visual quality inspection has been developed for this task (example taken from [5]).

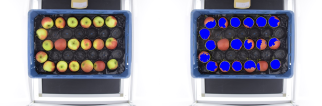
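The inspection logic described above (reject eggs that are too dirty or odd-shaped, then sort the rest by colour and size) can be sketched as a small decision function that runs after the image analysis. Everything here is a hypothetical assumption for illustration, not the system from [5]: the `EggMeasurement` fields, which an upstream vision step would have to produce, and the dirt and shape thresholds. The size classes are modelled on the EU weight grades for eggs (S, M, L, XL).

```python
from dataclasses import dataclass


@dataclass
class EggMeasurement:
    """Hypothetical per-egg features produced by an upstream computer vision step."""
    dirt_fraction: float    # share of visible shell area classified as dirt (0..1)
    shape_deviation: float  # deviation from a reference egg outline (0..1)
    colour: str             # e.g. "white" or "brown"
    weight_g: float         # weight in grams


# Illustrative thresholds only; a real line would be calibrated per installation.
MAX_DIRT_FRACTION = 0.02
MAX_SHAPE_DEVIATION = 0.15


def size_class(weight_g: float) -> str:
    """Weight grades modelled on EU egg classes: S < 53 g <= M < 63 g <= L < 73 g <= XL."""
    if weight_g >= 73:
        return "XL"
    if weight_g >= 63:
        return "L"
    if weight_g >= 53:
        return "M"
    return "S"


def sort_egg(egg: EggMeasurement) -> str:
    """Return 'reject' for eggs unfit for the consumer market, otherwise a bin such as 'brown-L'."""
    if egg.dirt_fraction > MAX_DIRT_FRACTION or egg.shape_deviation > MAX_SHAPE_DEVIATION:
        return "reject"
    return f"{egg.colour}-{size_class(egg.weight_g)}"
```

For instance, `sort_egg(EggMeasurement(0.0, 0.05, "brown", 65.0))` returns `"brown-L"`. The rules themselves are simple; in practice, the hard part is extracting reliable dirt and shape measurements from images at line speed, which this sketch leaves out.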

Example 2: Colour learning to determine the blush percentage of apples

One of the factors in determining apple quality is the percentage of blush (the red overcolour) on the apples. This can be done automatically by taking a picture of a crate of apples, letting an algorithm separate the apple surface into two colour classes (green and red, or yellow and red), and determining the ‘red’ surface area as a percentage. This standardised tool is frequently used in quality inspection research labs.
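The automatic separation into two colour classes can be sketched as one-dimensional k-means clustering with k = 2 on a simple redness feature. This Python sketch is an assumption for illustration, not the standardised tool mentioned above: it presumes the apple pixels have already been separated from the background, and both the feature `(R - G) / (R + G)` (high for red, near zero for yellow, negative for green) and the function names are our own hypothetical choices.

```python
Pixel = tuple[int, int, int]  # (R, G, B), each 0..255


def redness(pixel: Pixel) -> float:
    """1-D colour feature: near +1 for red, about 0 for yellow, negative for green."""
    r, g, _ = pixel
    return (r - g) / (r + g + 1e-9)  # tiny epsilon avoids division by zero for black pixels


def two_means(values: list[float], iterations: int = 20) -> tuple[float, float]:
    """1-D k-means with k = 2; returns (low_centroid, high_centroid)."""
    low, high = min(values), max(values)  # start the centroids at the extremes
    for _ in range(iterations):
        low_group = [v for v in values if abs(v - low) <= abs(v - high)]
        high_group = [v for v in values if abs(v - low) > abs(v - high)]
        if low_group:
            low = sum(low_group) / len(low_group)
        if high_group:
            high = sum(high_group) / len(high_group)
    return low, high


def blush_percentage(apple_pixels: list[Pixel]) -> float:
    """Percentage of apple pixels assigned to the 'red' (higher-redness) cluster."""
    values = [redness(p) for p in apple_pixels]
    low, high = two_means(values)
    red_count = sum(1 for v in values if abs(v - high) < abs(v - low))
    return 100.0 * red_count / len(values)
```

For 30 red pixels `(200, 30, 40)` and 70 green pixels `(60, 180, 50)`, `blush_percentage` returns 30.0. Because k-means always produces two groups, an apple that is almost entirely one colour would still be split in two; a practical tool would need a guard for that case, for example a minimum distance between the two centroids.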

Neural networks, whose large, many-layered variants are known as deep learning models, encompass a wide range of machine learning algorithms that use a specific computational approach to identify patterns in data and apply that knowledge to perform tasks. They are called neural networks because they are inspired by the way neurons work in the human brain. Compared to other machine learning techniques, deep neural networks are more flexible and can be applied in diverse contexts, which is made possible by the greater computational complexity of these models (and the increased computational power of our computers).
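The basic building block of a neural network is a single artificial neuron: it computes a weighted sum of its inputs, adds a bias, and passes the result through an activation function. The Python sketch below trains one such neuron with the classic perceptron learning rule on a made-up toy task (inputs `(is_red, is_soft)`, "ripe" only when both are 1). The names and data are hypothetical, and this is deliberately not a deep network: deep learning stacks many neurons in layers, uses smooth activations, and trains them with backpropagation.

```python
Sample = tuple[int, int]


def step(x: float) -> int:
    """Threshold activation: the neuron 'fires' (1) only for positive input."""
    return 1 if x > 0 else 0


def predict(weights: list[float], bias: float, x: Sample) -> int:
    # Weighted sum of the inputs plus a bias, passed through the activation.
    return step(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_perceptron(samples: list[Sample], labels: list[int],
                     epochs: int = 20, lr: float = 1.0) -> tuple[list[float], float]:
    """Perceptron learning rule: adjust the weights only when a prediction is wrong."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            error = y - predict(weights, bias, x)  # -1, 0 or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Hypothetical toy task: "ripe" only when the fruit is both red and soft.
SAMPLES: list[Sample] = [(0, 0), (0, 1), (1, 0), (1, 1)]
RIPE = [0, 0, 0, 1]
```

After `weights, bias = train_perceptron(SAMPLES, RIPE)`, the neuron reproduces all four labels. A single neuron can only separate classes with a straight line (it cannot learn XOR, for example); combining many of them in layers is what gives neural networks their flexibility.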

Some examples of supervised neural networks in the agrifood domain follow below.

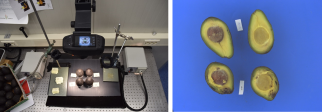

Example 3: Detecting stem-end rot in avocados

There is significant interest in monitoring and identifying decay, ripening and disease during transport, storage and on retailers’ shelves. One of the most common postharvest diseases affecting avocado supply chains is stem-end rot. Stem-end rot is a fungal disease: given the appropriate temperature and humidity, the fungal spores germinate, enter the fruit through the stem, and infect it. The damage is usually not visible from the outside; to the naked eye, an infected avocado appears healthy. Only when the damage is extremely severe do the symptoms become visible to the naked eye. In this work, we explored [hyper]spectral imaging combined with machine learning (ML) to detect stem-end rot in Hass avocados non-destructively.
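To give a feel for how spectral measurements can feed a classifier, here is a minimal Python sketch of a nearest-centroid classifier on normalised spectra. It is not the method used in this work: the four-band reflectance values are invented for illustration (real hyperspectral images record many more bands per pixel and need calibration), and the function names are hypothetical. Normalising each spectrum to unit length makes the comparison depend on the spectral shape rather than on overall brightness.

```python
import math

Spectrum = list[float]  # reflectance per wavelength band


def normalise(spectrum: Spectrum) -> Spectrum:
    """Scale a spectrum to unit length so brightness differences do not dominate."""
    norm = math.sqrt(sum(v * v for v in spectrum))
    return [v / norm for v in spectrum]


def fit_centroids(spectra: list[Spectrum], labels: list[str]) -> dict[str, Spectrum]:
    """Average the normalised training spectra per class."""
    groups: dict[str, list[Spectrum]] = {}
    for spectrum, label in zip(spectra, labels):
        groups.setdefault(label, []).append(normalise(spectrum))
    return {label: [sum(band) / len(band) for band in zip(*members)]
            for label, members in groups.items()}


def classify(centroids: dict[str, Spectrum], spectrum: Spectrum) -> str:
    """Assign the class whose mean spectrum is closest (Euclidean distance)."""
    s = normalise(spectrum)
    return min(centroids, key=lambda label: math.dist(centroids[label], s))


# Invented toy spectra (4 bands); not measured data.
TRAIN_SPECTRA = [
    [0.30, 0.45, 0.50, 0.60], [0.32, 0.47, 0.52, 0.62],  # healthy
    [0.30, 0.35, 0.30, 0.28], [0.28, 0.33, 0.29, 0.27],  # stem-end rot
]
TRAIN_LABELS = ["healthy", "healthy", "stem-end rot", "stem-end rot"]
```

With `centroids = fit_centroids(TRAIN_SPECTRA, TRAIN_LABELS)`, a brighter measurement of the same shape, such as `[0.45, 0.675, 0.75, 0.90]`, is still classified as `"healthy"`. This illustrates why spectral shape, which can reveal changes beneath the skin, is the useful signal.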

In all cases, whether classical machine learning or deep learning, machine learning is impossible without data. In most cases, the data must be annotated. Annotated data is a piece of data, such as an image, accompanied by some form of explanation, such as a description of what the image shows. Annotated data enables the use of supervised algorithms. There are also unsupervised ML algorithms, which work without annotations. These algorithms are designed to “discover” hidden patterns in the data (i.e. the data is the only guide to identifying relationships and patterns). Finally, there are self-learning ML approaches, reinforcement learning algorithms, in which the artificial intelligence software learns from its mistakes by trial and error.
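Learning by trial and error can be illustrated with the simplest reinforcement-learning setting, a multi-armed bandit, solved here with the epsilon-greedy strategy. In this Python sketch, the three reward probabilities form a hypothetical simulated environment that the learner cannot see: it mostly repeats the action that has paid off best so far, occasionally tries a random one, and updates its estimates from the rewards it receives. Full reinforcement learning (for example Q-learning) also handles actions that change the situation the agent is in, which this sketch leaves out.

```python
import random


def epsilon_greedy(reward_probs: list[float], trials: int = 2000,
                   epsilon: float = 0.1, seed: int = 42) -> list[float]:
    """Learn by trial and error which action pays off best.

    `reward_probs` is a hypothetical environment: the chance that each action yields a reward.
    Returns the learned value estimate for every action.
    """
    rng = random.Random(seed)  # seeded so that runs are reproducible
    n_actions = len(reward_probs)
    counts = [0] * n_actions
    estimates = [0.0] * n_actions
    for _ in range(trials):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore: try a random action
        else:
            action = max(range(n_actions), key=estimates.__getitem__)  # exploit the best so far
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0  # simulated outcome
        counts[action] += 1
        # Incremental mean: nudge the estimate toward the observed reward.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates
```

With `epsilon_greedy([0.2, 0.5, 0.8])`, the highest estimate should end up on the third action, even though the learner was never told which action was best. The `epsilon` parameter sets the balance between exploiting what already works and exploring alternatives.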

Interview with ChatGPT

ChatGPT is perhaps the best-known application of artificial intelligence in 2023. In the news, at work, even at informal gatherings, someone is bound to bring up the possibilities of ChatGPT. We thought it would be good to talk with such an intelligent chatbot to see what it thinks of itself in relation to the agrifood world. It is easy to start interacting with ChatGPT yourself: navigate to https://chat.openai.com/, register (for free), and just type questions in your own language about any topic.

Let’s see how ChatGPT explains what it is from its own standpoint:

Question from WFBR: I would like to know a bit more about yourself.

Answer from ChatGPT-3.5: I am an AI language model called ChatGPT. I was developed by OpenAI using the GPT-3.5 architecture, which is an advanced neural network that allows me to generate human-like responses to natural language input.

My purpose is to assist people in a variety of tasks, such as answering questions, providing information, and engaging in conversation. I have been trained on a large corpus of text from the internet, which means that I have a vast knowledge base on a wide range of topics.

Although I am an artificial intelligence, I don't have a physical body or personality like a human being. I exist purely as a language model that responds to text-based input. However, I strive to provide helpful and informative responses to the best of my abilities.

Q: I work in the agrifood domain. Can you see an application of yourself in that domain?

A: Yes, there are several potential applications of AI language models like me in the agrifood domain. Here are a few examples:

1. Crop monitoring and disease detection: AI language models can be used to analyze large amounts of data from sensors and other sources to identify patterns that could indicate potential crop diseases or other issues. This can help farmers to take proactive measures to protect their crops and increase yields.

2. Precision agriculture: AI language models can be used to analyze data on soil quality, weather patterns, and other factors to help farmers optimize their planting, irrigation, and harvesting practices. This can lead to more efficient use of resources and higher yields.

3. Supply chain optimization: AI language models can be used to analyze data on supply and demand in the agrifood industry to help companies optimize their production and distribution processes. This can help to reduce waste, lower costs, and increase efficiency.

These are just a few examples of the potential applications of AI language models in the agrifood domain. As the technology continues to evolve, there are likely to be many more opportunities to apply AI to improve the efficiency, sustainability, and safety of food production and distribution.

Q: I expect that there will be other forms of intelligent agents in play in the upcoming years. Where do you see the most potential for AI, perhaps in combination with robotics, in the agrifood domain?

A: Yes, there are indeed many other forms of intelligent agents being developed, including robotics and other AI-powered systems. In the agrifood domain, there are several areas where AI, combined with robotics, has the potential to make a significant impact:

1. Precision agriculture: Robots equipped with sensors and AI-powered systems can be used to analyze soil quality, weather patterns, and other factors to optimize planting, irrigation, and harvesting practices. This can lead to more efficient use of resources and higher yields. Robots equipped with AI-powered systems can be used to monitor crop health, detect diseases, and manage pests. This can help farmers to take proactive measures to protect their crops and increase yields.

2. Food processing and packaging: Robots equipped with AI-powered systems can be used to automate the processing and packaging of food products, reducing the need for manual labor and improving efficiency.

3. Autonomous vehicles: Autonomous vehicles equipped with AI-powered systems can be used to transport crops, livestock, and other goods more efficiently and safely. This can help to reduce the cost of transportation and improve supply chain management.

These are just a few examples of the potential applications of AI and robotics in the agrifood domain. As technology continues to evolve, we can expect to see even more innovative and impactful solutions emerge that combine AI with robotics and other intelligent systems.

Q: Can you see an example in quality inspection of fresh produce?

A: Certainly! AI and robotics have the potential to revolutionize the quality inspection of fresh produce, improving accuracy and efficiency while reducing waste.

Traditionally, the inspection of fresh produce has been done manually, with workers visually inspecting each piece of produce for defects, such as bruises or blemishes. This process can be time-consuming and subject to human error, leading to inconsistent results and increased waste.

AI-powered systems, combined with robotics, offer a solution to this problem. For example, robots equipped with cameras and other sensors can be used to automatically inspect each piece of produce as it moves along a conveyor belt. The images captured by the cameras can then be analyzed by an AI system that is trained to detect defects and sort the produce accordingly.

The AI system can be trained using large datasets of images of both high and low-quality produce, allowing it to learn to identify defects with a high degree of accuracy. As a result, the system can quickly and accurately sort the produce, separating high-quality produce that is suitable for sale from lower-quality produce that may be used for other purposes, such as making juice or compost.

This approach has several benefits. First, it reduces the need for manual labor, freeing up workers to focus on other tasks. Second, it improves the accuracy and consistency of the inspection process, reducing the risk of human error and increasing the quality of the final product. Finally, it reduces waste by ensuring that only high-quality produce is sold to consumers.

In conclusion, the combination of AI and robotics has the potential to significantly improve the quality inspection of fresh produce, leading to increased efficiency, accuracy, and sustainability.

Q: Will this take away jobs of current quality inspectors?

A: The adoption of AI and robotics for quality inspection of fresh produce may lead to a reduction in the number of manual labor jobs in the industry. However, it is important to note that the implementation of these technologies may also create new job opportunities in areas such as engineering, data analysis, and system maintenance.

While AI and robotics can automate certain tasks, they cannot completely replace the need for human workers. There will still be a need for workers to oversee the operation of these systems, perform maintenance and repairs, and ensure that the technology is functioning properly.

Moreover, the implementation of AI and robotics can actually improve working conditions for existing quality inspectors by reducing the physical strain of repetitive manual tasks and allowing them to focus on more complex and rewarding aspects of their work, such as problem-solving and decision-making.

In summary, while the adoption of AI and robotics for quality inspection of fresh produce may lead to a reduction in manual labor jobs, it is important to consider the potential benefits of these technologies in terms of improved efficiency, accuracy, and sustainability, as well as the creation of new job opportunities in related fields.

Concluding remarks

When you read ChatGPT's answers, it may seem that a person is talking rather than an intelligent agent, which suggests that we are close to meeting the Turing test criterion. It is important to note that ChatGPT builds its answers from the existing data it was trained on, such as scientific articles, webpages and books. It combines this material in a smart way, but does not create new information (yet).

We truly believe that smart technology will keep advancing and that more and more applications in the agrifood domain will be empowered by artificial intelligence. Many difficult tasks will become possible because analysing huge amounts of data has become technologically feasible. In the coming decades, we will see a shift from work without AI assistance to work with AI assistance. The potential for the agrifood domain is huge.

References

[1] NOS, https://nos.nl/artikel/2460976-microsoft-investeert-miljarden-in-bedrijf-achter-chatgpt, website accessed March 2023.

[2] Stanford Encyclopedia of Philosophy, https://plato.stanford.edu/entries/turing-test/, website accessed March 2023.

[3] Wikipedia, https://en.wikipedia.org/wiki/Timeline_of_artificial_intelligence, website accessed March 2023.

[4] Wikipedia, https://en.wikipedia.org/wiki/AI_winter, website accessed March 2023.

[5] Koenderink, N.J.J.P., 2010, A knowledge-intensive approach to computer vision systems, PhD thesis, https://edepot.wur.nl/238633